I S K O

Encyclopedia of Knowledge Organization

Science mapping

by Eugenio Petrovich

Table of contents:

1. Introduction

1.1 Structure of the entry

1.2 Three caveats about this entry

2. A brief history of science mapping

2.1 Ancestors of science maps

2.2 Modern science mapping

3. Building a science map: the general workflow

3.1 Data sources for science mapping

3.2 Field delineation

3.3 Data cleaning and pre-processing

3.4 Network extraction

4. Types of science maps

4.1 Citation-based maps: 4.1.1 The nodes in citation-based maps: publications and aggregates of publications; 4.1.2 The links in citation-based maps: direct citations, bibliographic coupling, and co-citations; 4.1.3 Normalization; 4.1.4 Visualization; 4.1.5 Enriching the map

4.2 Term-based maps: 4.2.1 Classic co-word analysis and the strategic diagrams; 4.2.2 Co-word analysis based on automatically extracted terms

4.3 Other network-based maps: 4.3.1 Co-authorship networks; 4.3.2 Interlocking editorship networks

4.4 Other types of science maps: 4.4.1 Maps based on patents data; 4.4.2 Geographic maps of science

5. The representation of time in science mapping

6. Interpreting a science map

7. Science maps and the philosophy of science

7.1 On the objectivity of science maps

7.2 Published science vs. science in the making

7.3 The meaning of citations

8. Science maps and science policy

9. Conclusion

Acknowledgments

Endnotes

References

Appendix: Science mapping tools: (1) CiteSpace; (2) VOSviewer

Colophon

Abstract:

Science maps are visual representations of the structure and dynamics of scholarly knowledge. They aim to show how fields, disciplines, journals, scientists, publications, and scientific terms relate to each other. Science mapping is the body of methods and techniques that have been developed for generating science maps. This entry is an introduction to science maps and science mapping. It focuses on the conceptual, theoretical, and methodological issues of science mapping, rather than on the mathematical formulation of science mapping techniques. After a brief history of science mapping, we describe the general procedure for building a science map, presenting the data sources and the methods to select, clean, and pre-process the data. Next, we examine in detail how the most common types of science maps, namely the citation-based and the term-based, are generated. Both are based on networks: the former on the network of publications connected by citations, the latter on the network of terms co-occurring in publications. We review the rationale behind these mapping approaches, as well as the techniques and methods to build the maps (from the extraction of the network to the visualization and enrichment of the map). We also present less-common types of science maps, including co-authorship networks, interlocking editorship networks, maps based on patents’ data, and geographic maps of science. Moreover, we consider how time can be represented in science maps to investigate the dynamics of science. We also discuss some epistemological and sociological topics that can help in the interpretation, contextualization, and assessment of science maps. Then, we present some possible applications of science maps in science policy. In the conclusion, we point out why science mapping may be interesting for all the branches of meta-science, from knowledge organization to epistemology.

1. Introduction

Science maps, also known as scientographs, bibliometric network visualizations, and knowledge domain maps, are visual representations of the structure and dynamics of scholarly knowledge. They aim to show how → disciplines, fields, specialties, authors, keywords, or publications relate to each other (Börner, Chen and Boyack 2005; Chen 2013; Osinska and Malak 2016; Rafols, Porter and Leydesdorff 2010; Small 1999; Van Raan 2019). Science maps are usually generated based on the analysis of large collections of scientific → documents (Börner 2010; Cobo et al. 2011b).

Science mapping is the body of methods and techniques that have been developed to generate science maps. Science mapping has a long tradition in bibliometrics and scientometrics, i.e., the quantitative studies of science (Chen 2017; Van Raan 2019). In recent decades, it has increasingly become an interdisciplinary area, witnessing important contributions from → data science, where science mapping belongs to the larger and increasingly important area of information visualization (Börner, Chen and Boyack 2005).

Science maps have several applications. They help to answer questions such as: What are the main topics within a certain scientific domain? How do these topics relate to each other? How has a certain scientific domain developed over time? Who are the key actors (researchers, institutions, journals) of a scientific field? Science maps help to investigate how the structural units of science relate to one another at the micro and macro level (Leydesdorff 1987), what factors determine the emergence of new scientific fields and the development of interdisciplinary areas (Leydesdorff and Goldstone 2014), and, more generally, how scientific change functions (Leydesdorff 2001; Lucio-Arias and Leydesdorff 2009). At the same time, the information made accessible by science maps can be highly relevant for science policy purposes.

Science maps, and especially the global maps, also known as “atlases of science” (see Section 3.2: Field delineation), can help to → classify the sciences by showing their mutual relationships (e.g., by showing the citation flows between fields). In this sense, science maps are useful tools in → knowledge organization and have been used to build classification systems with a bottom-up approach (see e.g., Waltman and van Eck 2012). However, standard methods of science mapping are not based on and do not result in semantic relationships between categories (e.g., genus-species relation) but association measures between units of analysis (e.g., co-citation strength between publications, or co-authorship association between authors). The closest to semantic relations that can be produced by standard science mapping approaches is the relation of inclusion obtained by clustering techniques, in which higher-order clusters include lower-order clusters (see Section 4.1.5: Enriching the map). Science maps, hence, are not meant to replace taxonomies, classificatory schemes, ontologies, and other classic → knowledge organization systems (KOS) (Hjørland 2013; Mazzocchi 2018). Rather, they can integrate them by providing extra information on the structure of science based on the analysis of citation networks and other kinds of scientific networks. At the same time, the application of science maps is not restricted to knowledge organization but extends to the sociology of science and science policy.

1.1 Structure of the entry

This article is an introduction to science maps and science mapping methodology. It is structured as follows. Section 2 offers a brief overview of the history of science mapping. Section 3 presents the standard workflow behind a science map and the preliminary steps of science mapping: data collection, field delineation, data pre-processing, and network extraction. Section 4 examines the different types of science maps that can be generated from network data. Section 4.1 is devoted to citation-based maps, i.e., those maps that are based on publications (or aggregates of publications) and citations (or citation-based relations) between publications. This section describes in detail some procedures that are also common to other science maps, such as the normalization of the raw relatedness scores, and the two most widespread visualization approaches, the graph-based and the distance-based. It also presents some techniques that can be used to complement the results of mapping and ease the interpretation of science maps, such as clustering. Section 4.2 discusses term-based maps, i.e., those maps that are based on the analysis of the titles, abstracts, keywords or bibliographic descriptors of scientific publications. We will first present the classic co-word analysis as developed in the sociology of science and then focus on maps based on terms extracted automatically with natural language processing techniques. Section 4.3 briefly overviews science maps based on co-authorship and interlocking editorship networks, whereas Section 4.4 reviews science maps based on patents and geographic maps of science. Section 5 discusses different strategies to include the dimension of time in science maps. Section 6 is devoted to the last step in the science mapping workflow, namely interpretation, and discusses some general issues of science mapping, such as the importance of the level of analysis and the applicability of science mapping to the humanities. In Section 7, some epistemological topics that bridge science mapping, sociology of science, and philosophy of science are discussed: the objectivity of science maps, the relationship between the published side of science and scientific practice, and the meaning of citations. Section 8 overviews the potential applications of science maps in science policy. Lastly, the Conclusion will sketch how science mapping may be of interest for all the disciplines that compose meta-science. In the Appendix, two tools currently available for producing science maps, CiteSpace and VOSviewer, are briefly reviewed.

1.2 Three caveats about this entry

First, this entry focuses on conceptual, theoretical, and methodological issues of science mapping rather than on the rigorous mathematical formulation of science mapping techniques, as the basic ideas behind the techniques can often be understood without reference to the formal machinery. Relevant technical literature will be pointed out in the references.

Secondly, we will focus on the methodology of science mapping, rather than on specific exemplars of science maps. We aim to provide the readers with the tools to understand and independently assess the science maps they will encounter (or produce!), rather than offer our opinion on existing maps. A wonderful collection of science maps can be found in the Atlas of Science by Katy Börner (Börner 2010) and in the exhibit Places and Spaces: Mapping Science, which has been popularizing the topic of science mapping among the general public all over the world since 2005 [1].

Lastly, science mapping is not a static research field, but it is constantly moving forward. New mapping methods are developed, old algorithms are dismissed, science mapping tools are refined, and larger maps are built as higher computing capacity becomes available. Therefore, it is not uncommon to find disagreement in the current science mapping literature (e.g., Boyack and Klavans 2010). In this article, we will try as far as possible to remain neutral concerning these discussions, presenting to the reader the different options without taking a position.

2. A brief history of science mapping

Modern science mapping relies on the data provided by large, multidisciplinary databases that index vast portions of the scientific literature (see Section 3.1: Data sources for science mapping). Before the creation of these databases in the 1960s, it was virtually impossible to generate science maps in the modern sense. The idea of representing the structure of human knowledge by visual aids, however, dates far back in history.

2.1 Ancestors of science maps

Already in the Middle Ages, the relationships between the seven liberal arts, comprising the trivium and quadrivium, were visually represented by allegories [2]. However, the most popular visual metaphor in history for visualizing knowledge has been the tree (Lima 2014). Its origins can be traced back to Aristotle and to the Isagoge, an introduction to Aristotle’s logic written by Porphyry in the 3rd Century. In the 13th Century, Ramon Llull depicted a tree of the sciences in his Arbor Scientiae (1295). Descartes, in the Principia Philosophiae (1644), used the same image to explain the relationship between metaphysics, physics, and the applied sciences. During the Enlightenment, the famous Encyclopédie of Diderot and d’Alembert contained a tree-like taxonomy of human knowledge (“Système figuré des connaissances humaines”). Similar structures can also be found in the 19th Century, in philosophical treatises on the classification and organization of the sciences [3].

2.2 Modern science mapping

The tree-like representations of the sciences in the past usually had a philosophical aim. They served to reflect on the most general principles that underlie human knowledge. At the same time, they aimed at organizing scientific and scholarly disciplines, by creating hierarchies between them. Often, they were proposed with a normative spirit: more than describing the actual organization of knowledge, they wanted to reform and improve it. What they all shared was a “top-down” approach. Starting from a certain idea of human knowledge and a certain set of classificatory categories, a taxonomy was devised, which was then used to categorize the individual items of knowledge, such as books or scientific papers. The Dewey Decimal Classification, a → library classification system developed in 1876, epitomizes such a top-down approach.

The creation of the Science Citation Index (SCI) in the 1960s by Eugene Garfield at the Institute for Scientific Information allowed, for the first time, a bottom-up approach. As we will see in more detail in the following sections (see Section 3.4: Network extraction), the SCI indexed the citation links between the articles published in scientific journals. This made it possible to reconstruct the network in which each scientific article is embedded and, by connecting all these networks, to reconstruct the structure of entire scientific areas. In this way, a new method to map human knowledge emerged. The historian of science Derek de Solla Price was the first to suggest such an idea in 1965 (Price 1965). Garfield himself proposed the method of historiographs to reconstruct the temporal development of scientific ideas by analyzing the citation links between publications (Garfield 1973) (see Section 5: The representation of time in science mapping).

In the 1960s and 1970s, two new techniques, both based on citations, were developed to measure the association of scientific papers: bibliographic coupling (Kessler 1963) and co-citation (Small 1973; Marshakova 1973). They soon became standard techniques for science mapping (see Section 4.1.2: The links in citation-based maps). Henry Small started to use co-citation analysis to map scientific areas and study their evolution over time. He generated the first science maps based on co-citation analysis in 1977 to study the field of collagen research (Small 1977).

In the 1980s, new methods of analysis were developed, such as author co-citation analysis (White and Griffith 1981) and co-word analysis (Callon et al. 1983). At the same time, the technical aspects of science mapping were discussed and sometimes disputed (Leydesdorff 1987). The 1990s saw important advancements in computer visualization techniques and, in 1991, the first science mapping program for the personal computer, SCI-map, was made available. In the 2000s, the improvement of computer capacity made it possible to produce the first global maps of science, based on the analysis of thousands of journals and millions of publications. New user-friendly science mapping tools, such as CiteSpace and VOSviewer, were launched in the 2010s, so that nowadays non-experts too can generate their own science maps. In the last twenty years, science mapping has become an increasingly interdisciplinary area, with important contributions from computer scientists and experts in information visualization, and the last ten years have seen what has been called a “Cambrian explosion of science maps” (Börner, Theriault and Boyack 2015) [4].

3. Building a science map: the general workflow

The construction of a science map follows a general workflow that comprises the following steps (Börner, Chen and Boyack 2005; Cobo et al. 2011b):

- Data collection. Based on the research question of the analyst, the data for the mapping are collected. In principle, any relational feature of the scientific activity can be collected by different methods. In practice, however, most science mapping studies are based on data stored in bibliographic data sources. Hence, the data collection consists in identifying appropriate queries to extract bibliographic data from those sources.

- Pre-processing. The raw data are cleaned and, if needed, further selected (for instance, only publications cited over a certain threshold are retained). This step is crucial since the quality of the mapping depends on the quality of the underlying data.

- Network extraction. Depending on the chosen unit of analysis (publication, term, author, journal, institution, etc.) and the kind of analysis (direct linkage, co-citation, bibliographic coupling, co-word analysis, etc.) the corresponding network is extracted from the data.

- Normalization. Usually, the relatedness scores (e.g., the raw number of co-citations between publications) are not directly used to generate the science maps because experience and experimentation have shown that they can create distortions due to the different sizes of the items (Boyack and Klavans 2019). It is thus a common practice to perform normalization on the raw values using similarity measures.

- Visualization. There are different options to visualize the network. In graph-based visualizations, graph drawing algorithms are used. In distance-based visualizations, dimensionality reduction techniques are used to plot the data into a two-dimensional (or, more rarely, three-dimensional) layout, so that the distances between the points on the map reflect the similarity of the units of analysis.

- Enrichment. The elements of the map can be enriched to provide more information. Frequently, clustering techniques are used to find groups of similar nodes and colors are used to distinguish nodes belonging to different clusters.

- Interpretation. The science map is interpreted, usually with the help of experts in the mapped domain. The visual nature of the map enables the recognition of patterns and structures, which can provide an answer to research questions or help in addressing science policy issues.
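The normalization step in the workflow above can be illustrated with a small numerical sketch. It assumes one common choice of similarity measure, the cosine measure, in which each raw count is divided by the geometric mean of the sizes of the two items; the workflow description itself does not prescribe a specific measure. The publications `p1` to `p3`, their counts, and the function name `cosine_normalize` are hypothetical.

```python
import math

# Hypothetical raw co-citation counts between three publications.
raw = {
    ("p1", "p2"): 8,
    ("p1", "p3"): 2,
    ("p2", "p3"): 2,
}
# Hypothetical total number of citations received by each publication (its "size").
citations = {"p1": 100, "p2": 16, "p3": 4}


def cosine_normalize(raw_counts, sizes):
    """Divide each raw count by the geometric mean of the two item sizes."""
    return {
        (i, j): count / math.sqrt(sizes[i] * sizes[j])
        for (i, j), count in raw_counts.items()
    }


normalized = cosine_normalize(raw, citations)
for pair, value in sorted(normalized.items()):
    print(pair, raw[pair], round(value, 3))
```

In this toy case the pair with the highest raw count (`p1`, `p2`) is no longer the most similar pair after normalization, because `p1` is a very highly cited item. This is the kind of size effect that normalization is meant to correct.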

In the next sections, we focus on the first three steps of science mapping: data collection, data pre-processing, and network extraction. Based on the type of network extracted, different types of science maps can be generated. In Section 4, each type is examined in detail. Note that citation-based maps will allow us to describe the steps of normalization, visualization, and enrichment that recur also in the generation of other types of science maps. Section 5 is an excursus on how time can be represented in science maps, whereas Section 6 discusses the last phase of science mapping, i.e., the interpretation.

3.1 Data sources for science mapping

Science mapping is a methodology that can be applied, in principle, to a variety of data regarding the scientific enterprise. In practice, however, the main data sources for science mapping are bibliographic databases. Other types of data must be collected by the analysts.

Bibliographic databases used for this purpose are large multi-disciplinary databases that collect the → metadata of academic publications (authors, title, abstract, keywords, affiliation of the authors, publication year, etc.) along with their citations (hence their name of citation indexes). The main citation indexes are Clarivate’s Web of Science (WoS), Elsevier’s Scopus, and Google Scholar.

Recently, two open bibliographic databases have joined Google Scholar: Microsoft Academic (launched in 2006, it stopped being updated in 2012 and was relaunched in 2016 [5]) and Dimensions (launched in 2018 [6]). Moreover, in 2017 Crossref, a not-for-profit organization of publishers, made its citation data openly available. Comparisons between the coverage of these new databases and the coverage of traditional databases are currently being undertaken by the bibliometric community (Visser, van Eck and Waltman 2020; Harzing 2019).

In addition to multi-disciplinary databases, there are also specialized databases, focusing on specific disciplines (e.g., PubMed for medicine, and PsycInfo for psychology). Patent data can be retrieved from specific data sources such as the United States Patent and Trademark Office [7], Google Patents [8] and the database of the European Patent Office [9].

More detailed information about these databases can be found in → the dedicated entry of this encyclopedia (Araújo, Castanha and Hjørland 2020).

3.2 Field delineation

To produce a science map, we first need to identify a set of publications that reasonably represent the target of the mapping. In bibliometrics, this step is often called field delineation. Field delineation is the collection of documents that are both relevant and specific for the purpose of the mapping (Zhao 2009).

At this point, an important distinction can be made between global and local maps of science. Global maps of science (also known as “atlases of science”) aim to map the whole of science (Börner et al. 2012; Boyack, Klavans and Börner 2005; Boyack and Klavans 2019). To produce such maps, the main criterion is to maximize coverage. Local maps of science, on the other hand, focus on a limited portion of the scientific literature (Rafols, Porter and Leydesdorff 2010). Such a portion can be a scientific field, a specialty, a research topic, or the publication output of a university. In all these cases, the accurate selection of the target publications is a crucial step since an unrepresentative or wrong set of publications will produce a misrepresentation of the target.

Following Zitt and colleagues, we distinguish three general strategies for field delineation: (A) rely on external formalized resources, such as ready-made science classifications; (B) create ad hoc information retrieval searches; (C) use network exploration resources (i.e., science mapping itself) (Laurens, Zitt and Bassecoulard 2010; Zitt et al. 2019; Zitt and Bassecoulard 2006).

The first strategy is based on ready-made classifications, such as the ones used by Web of Science or Scopus to classify their records. Other classificatory schemes are produced in institutional settings (e.g., by research evaluation agencies or by research councils) and, clearly, by libraries. Note that sometimes the journal, rather than the individual article, is the unit of classification, with the articles inheriting the category of the journals where they are published. Following this first strategy, representative literature is retrieved by using these ready-made classifications at different levels of granularity (scientific field, specialty, sub-area, etc.). An evident shortcoming of this strategy is that it heavily relies on the quality of the chosen classifications.

The second strategy is based on creating, usually in close interaction with domain experts, ad hoc searches to query the databases. These queries can potentially include any searchable part of the bibliographic records: words in titles and abstracts, keywords, authors, affiliations, journals, dates, references, and so on. A typical query combines a search for specialized journals with a complementary lexical search. Note that the starting queries can be refined, for instance by citation analysis. Once a core set of publications, journals or even key authors is determined, new records are added by following the citations (articles citing the core set) or the references (articles cited by the core set), in an iterative process.
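The iterative expansion of a core set can be sketched as follows. This is only a minimal illustration under stated assumptions: the toy `references` dictionary, the publication labels, and the functions `expand_once` and `delineate` are hypothetical, and a real delineation would involve expert checks and relevance filters at each round rather than a blind expansion.

```python
# Hypothetical toy data: each publication maps to the publications it cites.
references = {
    "A": ["B", "C"],
    "B": ["C"],
    "C": [],
    "D": ["A"],
    "E": ["D"],
    "F": ["G"],
    "G": [],
}


def expand_once(core, references):
    """Add the articles cited by the core set and the articles citing it."""
    cited_by_core = {ref for pub in core for ref in references.get(pub, [])}
    citing_core = {pub for pub, refs in references.items() if core & set(refs)}
    return core | cited_by_core | citing_core


def delineate(seed, references, max_rounds=2):
    """Repeat the expansion for a fixed number of rounds, or until nothing new is added."""
    core = set(seed)
    for _ in range(max_rounds):
        expanded = expand_once(core, references)
        if expanded == core:
            break
        core = expanded
    return core


field = delineate({"A"}, references)
print(sorted(field))
```

Publications `F` and `G` are never reached because they have no citation link to the seed. Capping the number of rounds is one simple way to limit the noise that unrestricted expansion tends to introduce.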

The third strategy relies on science mapping methods and, in particular, on clustering. The basic idea is to use bottom-up clustering techniques that group publications based not on a classificatory scheme, but on their reciprocal relations (for instance, their co-citation strength, see Section 4.1.2: The links in citation-based maps). Techniques of network analysis, as well as experts’ knowledge, are then used to select the relevant clusters. By iterating this procedure, an increasingly precise field delineation is obtained.

All these approaches involve the double risk of losing relevant publications and introducing noise (irrelevant publications) in the dataset (Zitt et al. 2019). In fact, there is no one-size-fits-all solution to field delineation. From an operational point of view, a good strategy is to combine the different approaches recursively, checking each time the set of retrieved publications and refining the queries accordingly (an example of this approach can be found in Chen 2017).

However, it is important to remember that, from a theoretical point of view, there is no ground truth basis for defining research domains in a “purely objective” way. As the ongoing discussion about research areas definition and classification shows, research classification should be conceived as a social process involving multiple actors, from researchers to journals to research evaluation agencies, rather than as a static photograph of the structure of science. Classificatory schemes as well as the boundaries between areas are constantly negotiated and reshaped under the pressure of different social systems and infrastructures (Sugimoto and Weingart 2015). As these systems serve different purposes and are governed by different logics, frictions and inconsistencies between the classificatory schemes they produce are to be expected (Åström, Hammarfelt and Hansson 2017). For instance, an article can be classified as belonging to research area X based on the institutional affiliation of its authors and to research area Y based on the topic of the journal where it is published. Even if field delineation is the first step in many bibliometric analyses, including science mapping, its theoretical stakes should not be underestimated.

3.3 Data cleaning and pre-processing

When the field delineation is completed and the datasets are retrieved from bibliographic databases, the data consist basically of large tables, in which each row corresponds to a publication and the columns represent the available meta-data of that publication (e.g., title, authors, abstract, publication year, journal, cited references, etc.). Retrieved data usually contain errors, for instance, misspelled author names and errors in the cited references or journal titles. Cleaning the data is a pivotal step in the science mapping workflow because the quality of the results depends on the quality of the data. This task, however, can be highly time-consuming and can present difficult issues, such as the disambiguation of authors with identical names and the merging of authors with multiple names (Strotmann and Zhao 2012).

After the cleaning, the data can be pre-processed. They can be divided into different time sub-periods to carry out longitudinal studies (see Section 5: The representation of time in science mapping), or a portion of the retrieved data can be further selected based on some measure, such as the most cited articles, the most productive authors, or the journals with the highest performance metrics.
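The two pre-processing operations just mentioned, slicing by time period and selecting by a citation threshold, can be sketched on a toy table. The records, the field names (`id`, `year`, `times_cited`), the period boundaries, and the threshold are all hypothetical and do not correspond to the export format of any specific database.

```python
# Hypothetical cleaned records; field names are illustrative only.
records = [
    {"id": "p1", "year": 1998, "times_cited": 40},
    {"id": "p2", "year": 2003, "times_cited": 3},
    {"id": "p3", "year": 2007, "times_cited": 12},
    {"id": "p4", "year": 2012, "times_cited": 0},
    {"id": "p5", "year": 2015, "times_cited": 25},
]


def split_by_period(records, periods):
    """Assign each record to the (start, end) period that contains its year."""
    slices = {period: [] for period in periods}
    for record in records:
        for start, end in periods:
            if start <= record["year"] <= end:
                slices[(start, end)].append(record)
                break
    return slices


def keep_cited_at_least(records, threshold):
    """Retain only the records cited at least `threshold` times."""
    return [r for r in records if r["times_cited"] >= threshold]


periods = [(1995, 2004), (2005, 2014), (2015, 2024)]
slices = split_by_period(records, periods)
selected = keep_cited_at_least(records, 10)
print({p: [r["id"] for r in rs] for p, rs in slices.items()})
print([r["id"] for r in selected])
```

Each time slice can then be mapped separately for a longitudinal study, while the thresholded selection yields a smaller dataset focused on the most cited publications.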

3.4 Network extraction

In general, a network is a structure made of nodes (also called vertices) and links (also called edges). It can be represented as a graph or as a matrix. Networks are valuable tools to represent and study a great variety of natural and social phenomena, from the lineage of a family to patterns of contracts among firms, to the spreading of a virus (Barabási 2014).

In science mapping, we are interested in those networks that can capture the structure of science at different levels and from different points of view. In fact, these networks are the basic structure on which science maps are built.

From the same set of bibliographic records, it is possible to generate different networks, depending on the type of nodes and links we decide to focus on. The nodes will represent the unit of analysis of the final map, whereas the links will represent the type of relationship displayed.

4. Types of science maps

Science maps can be classified into different types depending on the kind of data, and hence the kind of network, they are based on. In principle, any feature of the scientific enterprise that can be represented in relational terms, i.e., as a network of nodes and links, can be used to generate a science map. In citation-based maps, the units of analysis (the nodes) are publications or aggregates of publications (e.g., journals or authors), and the relationships between them (the links) are citations or association measures based on citations (bibliographic coupling and co-citation). In term-based maps, the units of analysis are textual items (themes, keywords or terms) and the relationships are co-occurrence frequencies (e.g., the number of times two keywords are used together in a set of publications). In co-authorship maps, the units are the authors and the links are the number of co-authored publications. In interlocking editorship maps, the units are the journals and the links are the number of persons who are shared between the editorial boards of two journals. In addition to these, there are also science maps based on patents data and geographic maps of science, which will be the topic of Section 4.4.
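The co-occurrence links described above (keywords used together, authors publishing together) can be counted with one and the same routine, applied to different fields of the same records. The following is a minimal sketch; the records, the author and keyword labels, and the function `cooccurrence_links` are hypothetical.

```python
from collections import Counter
from itertools import combinations

# Hypothetical bibliographic records; field names and values are illustrative.
records = [
    {"authors": ["author_a", "author_b"], "keywords": ["citation", "mapping"]},
    {"authors": ["author_b", "author_c"], "keywords": ["mapping", "clustering"]},
    {"authors": ["author_a", "author_b"], "keywords": ["citation", "mapping", "clustering"]},
]


def cooccurrence_links(records, field):
    """Count how many records contain each unordered pair of items in `field`."""
    links = Counter()
    for record in records:
        items = sorted(set(record[field]))  # sort so that each pair has one canonical order
        links.update(combinations(items, 2))
    return links


coauthorship = cooccurrence_links(records, "authors")
coword = cooccurrence_links(records, "keywords")
print(coauthorship)
print(coword)
```

The only thing that changes between the co-authorship network and the co-word network is the field from which the nodes are taken, which is why the same set of records can give rise to different maps.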

4.1 Citation-based maps

4.1.1 The nodes in citation-based maps: publications and aggregates of publications

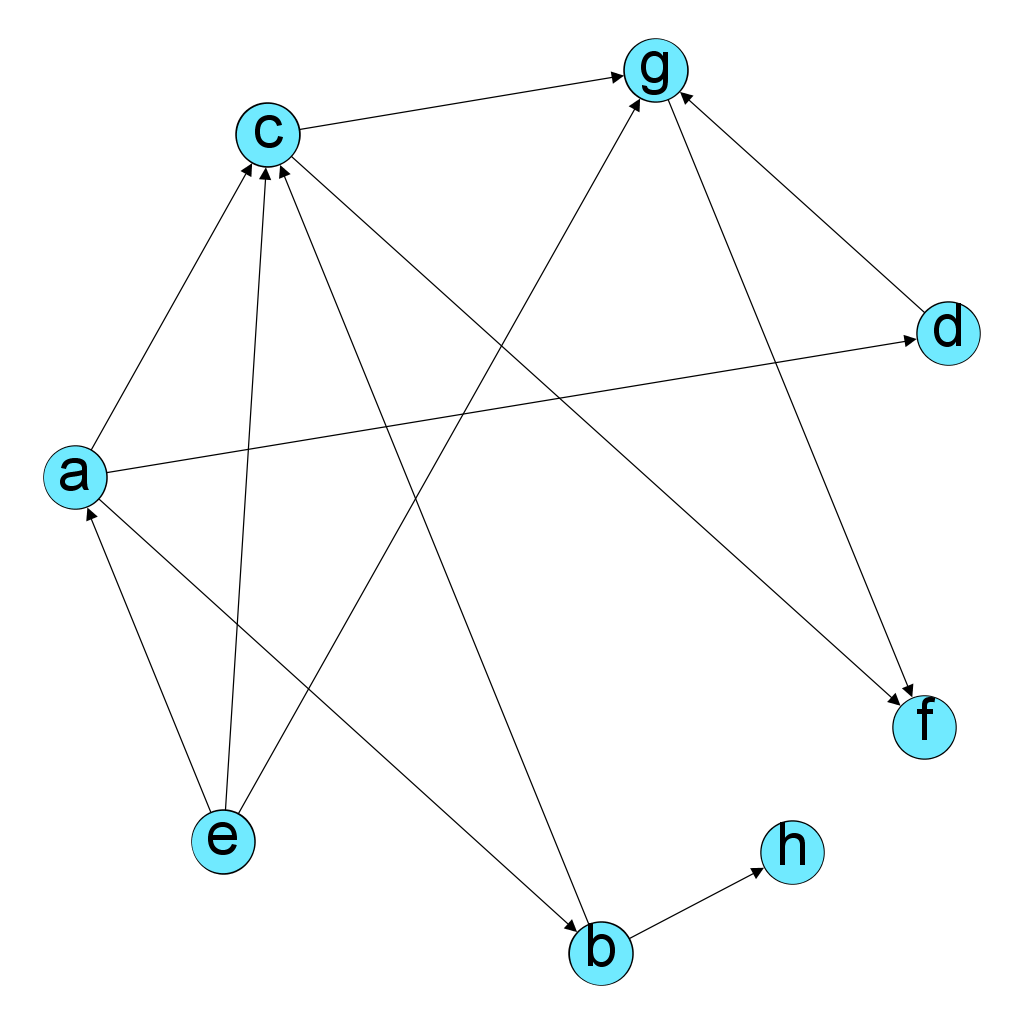

In the most basic citation-based map, the nodes represent individual publications and the links the citations (reference-links) among them. An example of a citation network is provided in Fig. 1, where it is visualized as a directed network in which nodes represent publications and arrows represent the reference-links (citations) between them. Some publications are both citing and cited (e.g., publication a), some publications are only cited (e.g., publication f), and some publications cite without being cited (e.g., publication e).

The same information can be represented as an adjacency matrix, whose elements indicate whether pairs of nodes are connected (“adjacent”) in the network or not. When the publication in the row cites the publication in the column, the corresponding element in the matrix is 1; otherwise it is 0 [10].

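|   | a | b | c | d | e | f | g | h |
|---|---|---|---|---|---|---|---|---|
| a | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 0 |
| b | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 |
| c | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 |
| d | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| e | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 |
| f | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| g | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| h | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |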
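As a small illustration of how such a matrix can be read, the sketch below stores the values exactly as they are printed above (including the diagonal entry in row d) and derives, for each publication, the number of references it makes (row sum) and the number of citations it receives (column sum). The variable names are assumptions made for this example.

```python
labels = ["a", "b", "c", "d", "e", "f", "g", "h"]

# Adjacency matrix copied as printed: A[i][j] == 1 means that row i cites column j.
A = [
    [0, 1, 1, 1, 0, 0, 0, 0],  # a
    [0, 0, 1, 0, 0, 0, 0, 1],  # b
    [0, 0, 0, 0, 0, 1, 1, 0],  # c
    [0, 0, 0, 1, 0, 0, 0, 0],  # d
    [1, 0, 1, 0, 0, 0, 1, 0],  # e
    [0, 0, 0, 0, 0, 0, 0, 0],  # f
    [0, 0, 0, 0, 0, 0, 0, 0],  # g
    [0, 0, 0, 0, 0, 0, 0, 0],  # h
]
n = len(labels)

# Row sums: references made by each publication (out-going links).
references_made = {labels[i]: sum(A[i]) for i in range(n)}
# Column sums: citations received by each publication (incoming links).
citations_received = {labels[j]: sum(A[i][j] for i in range(n)) for j in range(n)}

only_citing = [p for p in labels if references_made[p] > 0 and citations_received[p] == 0]
only_cited = [p for p in labels if references_made[p] == 0 and citations_received[p] > 0]
print(references_made)
print(citations_received)
print(only_citing, only_cited)
```

With these values, publication e is the only one that cites without being cited, and publication f is among those that are cited without citing.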

Since publications are provided with meta-data, such as their authors or the journals in which they are published, it is possible to build aggregates of publications sharing the same meta-data (Radicchi, Fortunato and Vespignani 2012). By aggregating publications at higher and higher levels, we can reach higher units of analysis and networks based on new types of nodes.

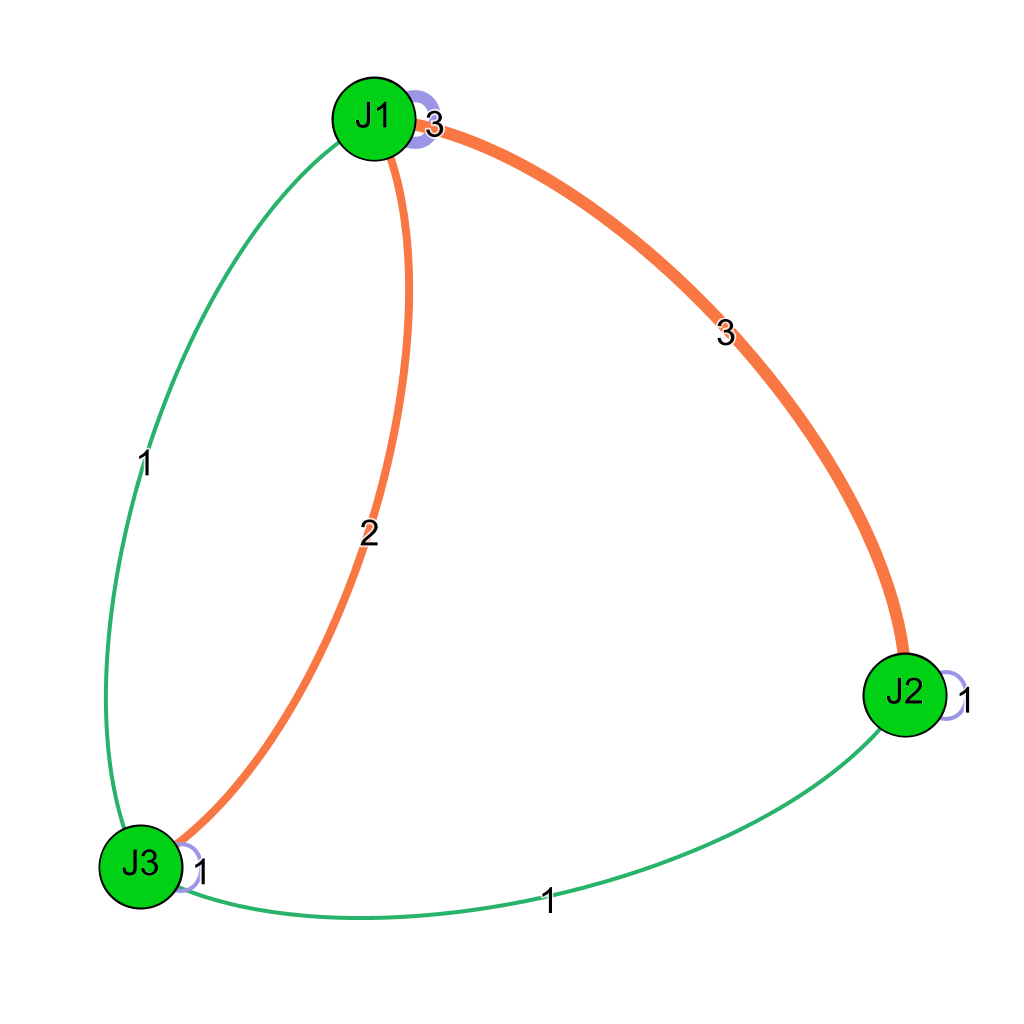

To understand this mechanism, we show how to build a journal citation network (Leydesdorff 2004; McCain 1991) starting from the document citation network of Fig. 1. We start by coloring the nodes according to the journals where they are published, and the citation links according to the journal to which they point (Fig. 2).

The journal citation network is obtained by substituting each article with its journal of publication and then using the journals as nodes of the network. A link between two journals is drawn when they exchange at least one citation. Note that in this new network, it is possible to assign a weight to each link, that is, the number of citations that each journal receives from other journals (or from itself). In the previous network, there were no proper weights but only an on/off relationship (presence of a reference-link or not). There are also some loops, produced by articles citing articles published in the same journal (these loops correspond to journal self-citations). The resulting journal citation network is shown in Fig. 3.
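The aggregation step can be sketched in a few lines. Since the journal assignments of Fig. 2 are not reproduced in the text, the example uses a hypothetical set of citation pairs and a hypothetical mapping from publications to journals (`J1`, `J2`); the function name `journal_citation_network` is likewise an assumption.

```python
from collections import Counter

# Hypothetical (citing, cited) pairs and journal assignments; not taken from Fig. 2.
citations = [("p1", "p2"), ("p1", "p3"), ("p2", "p3"), ("p4", "p1"), ("p4", "p3")]
journal_of = {"p1": "J1", "p2": "J1", "p3": "J2", "p4": "J2"}


def journal_citation_network(citations, journal_of):
    """Replace each article with its journal and count citations per journal pair."""
    weights = Counter()
    for citing, cited in citations:
        weights[(journal_of[citing], journal_of[cited])] += 1
    return weights


journal_links = journal_citation_network(citations, journal_of)
for (source, target), weight in sorted(journal_links.items()):
    kind = "self-citation loop" if source == target else "link"
    print(source, "->", target, weight, kind)
```

Pairs whose source and target coincide are the journal self-citation loops, and the counts are the link weights that the article-level network lacked.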

By the same aggregation process, we can construct author citation networks (e.g., McCain 1990; Radicchi et al. 2009), or reach higher units of analysis, such as institutions and even countries (e.g., Glänzel 2001).

4.1.2 The links in citation-based maps: direct citations, bibliographic coupling, and co-citations

Until now, we considered only citations as links in the citation network. The presence of a reference-link between two publications usually attests that they are somehow associated, for instance, that they share the same topic or research method (see Section 7.3: The meaning of citations). Maps in which the links are direct citations are called “direct-linkage” or “cross-citations” or “inter-citation” maps (Waltman and van Eck 2012). In science mapping, however, there are two other common techniques used to measure the relatedness or strength of association of publications (or their aggregates): bibliographic coupling (Kessler 1963) and co-citation (Small 1973; Marshakova 1973).

In bibliographic coupling, a link between two publications is established when they share at least one publication in their respective bibliographies, i.e., when they have at least one reference in common. The weight of the link is proportional to the number of shared references. Co-citation is, in a certain sense, the reverse of bibliographic coupling. In a co-citation network, a link is drawn between two publications if they are cited together by at least one third publication, and the weight of the link (the so-called co-citation strength) is proportional to the number of publications that cite both of them (i.e., the number of co-citations).

Fig. 4 shows the bibliographic coupling network generated from the citation network of Fig. 1. Note that publication f has no link with other publications because, in our example, it did not have any cited reference (i.e., no out-going link).

Fig. 5 shows the co-citation network. Analogously, publication e has no link with other publications because it had no citations (i.e., no incoming link).

Note that citations are directed links because we can distinguish between a sender and a receiver of the citation. In network theory terminology, they are called arcs (Wasserman and Faust 1994). In contrast, bibliographic coupling and co-citation links are un-directed links because bibliographic couplings and co-citations are symmetrical. In network theory terminology, they are called edges (Wasserman and Faust 1994).

Starting from a matrix whose rows are the citing publications and columns are the cited publications, it is possible to derive by matrix algebra operations the two co-occurrence matrices representing the bibliographic coupling network or the co-citation network (Van Raan 2019).
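As a minimal sketch of these matrix operations, assume that A is the citing-by-cited adjacency matrix of the example network. Multiplying A by its transpose yields the bibliographic coupling matrix, and multiplying the transpose by A yields the co-citation matrix. The code uses only the Python standard library; the helper names `transpose` and `matmul` are our own.

```python
labels = ["a", "b", "c", "d", "e", "f", "g", "h"]
# Rows: citing publications; columns: cited publications.
A = [
    [0, 1, 1, 1, 0, 0, 0, 0],
    [0, 0, 1, 0, 0, 0, 0, 1],
    [0, 0, 0, 0, 0, 1, 1, 0],
    [0, 0, 0, 1, 0, 0, 0, 0],
    [1, 0, 1, 0, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]


def transpose(m):
    """Swap rows and columns of a matrix stored as a list of lists."""
    return [list(column) for column in zip(*m)]


def matmul(x, y):
    """Plain matrix product of two lists of lists."""
    y_columns = transpose(y)
    return [[sum(p * q for p, q in zip(row, column)) for column in y_columns]
            for row in x]


A_t = transpose(A)
# Entry (i, j): number of references shared by publications i and j.
coupling = matmul(A, A_t)
# Entry (i, j): number of publications citing both i and j.
cocitation = matmul(A_t, A)
```

Both resulting matrices are symmetric, which reflects the un-directed nature of coupling and co-citation links. The diagonal entries simply count the references made (coupling) or the citations received (co-citation) by each publication and are usually ignored when the network is drawn.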

Note that direct citations, bibliographic coupling, and co-citation analysis can be applied not only to single publications, but also to aggregates of publications. For instance, if authors are used as units in co-citation analysis, we have author co-citation analysis (e.g., White and McCain 1998; Kreuzman 2001); if journals are used as units, we have journal co-citation analysis (e.g., McCain 1991). By combining methods and units of analysis in this way, several types of bibliometric networks can be generated (Waltman and van Eck 2014). Fig. 6 shows an example of a co-citation map using individual publications as units of analysis.

4.1.3 Normalization

Usually, the raw frequencies of citations, bibliographic couplings or co-citations are not directly used as input of the visualization process that leads to the final form of the science map. This is because the raw frequencies do not properly reflect the similarity between the items (van Eck and Waltman 2009). To understand why, suppose that department A and department B publish comparable articles, but department A, having more researchers than department B, publishes ten times more articles. Other things being equal, one would expect the articles from department A to receive in total about ten times as many citations as the articles from department B, and thus to have about ten times as many co-citations with other departments in the same discipline as department B. However, the fact that department A has more co-citations with other departments than department B does not indicate that it is more similar to other departments than department B. It only shows that department A publishes more articles than department B because it is bigger. To correct such a distortion due to the different size of the units of analysis, we need to transform the raw co-citation scores, adjusting them by some stable quantity, an operation called “normalization” (Eck et al. 2010).

In science mapping, similarity measures are used to perform such a normalization. Following Ahlgren and colleagues, we distinguish two main approaches to calculating these similarities: the local or direct and the global or indirect (Ahlgren, Jarneving and Rousseau 2003). In the former approach, the focus is on the co-occurrence frequencies of the items, which are then adjusted for different quantities. Examples include the cosine (the most popular one), the association strength (used in VOSviewer, see the Appendix), the inclusion index, and the Jaccard index (Eck and Waltman 2009). In the latter approach, the focus is on the way two items are related to all the other items in the dataset under study. This means that what is compared to obtain the similarity between two items are their entire profiles, i.e., the entire rows (or columns) of the co-occurrence matrix, and not their simple co-occurrence frequency. Pearson’s correlation coefficient (r), the cosine [11], and the chi-squared distance are examples of indirect similarity measures based on the global approach (McCain 1990; White and Griffith 1981). However, the reliability of Pearson’s r as a similarity measure has been contested (Ahlgren, Jarneving and Rousseau 2003; Eck and Waltman 2008).
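The direct measures mentioned above can be written down compactly. The sketch below uses the formulations commonly given in the literature, where c is the co-occurrence frequency of two items and s_i and s_j are their total numbers of occurrences; the function names and the numerical values are assumptions chosen for illustration.

```python
import math


def association_strength(c, s_i, s_j):
    """Co-occurrences divided by the product of the items' totals."""
    return c / (s_i * s_j)


def cosine(c, s_i, s_j):
    """Co-occurrences divided by the geometric mean of the totals."""
    return c / math.sqrt(s_i * s_j)


def inclusion(c, s_i, s_j):
    """Co-occurrences divided by the smaller of the two totals."""
    return c / min(s_i, s_j)


def jaccard(c, s_i, s_j):
    """Co-occurrences divided by the size of the union of occurrences."""
    return c / (s_i + s_j - c)


# Stylized version of the department example: A is ten times bigger than B,
# and both are compared with a third department C (all numbers hypothetical).
s_A, s_B, s_C = 1000, 100, 200
c_AC, c_BC = 100, 10  # raw co-citation counts differ by a factor of ten

strength_AC = association_strength(c_AC, s_A, s_C)
strength_BC = association_strength(c_BC, s_B, s_C)
```

Under these stylized numbers, the raw counts suggest that A is ten times more related to C than B is, whereas the association strength gives the same value for both pairs. The other measures correct for size in different ways and, in general, will not agree with each other.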

In general, there is no agreement on what the best normalization procedure is and on what similarity measures should be used in science mapping (Boyack and Klavans 2019; Leydesdorff 2008; Van Raan 2019). In the scientometric community, the discussion still goes on after 35 years (e.g., Zhou and Leydesdorff 2016). However, it is important to remember that, depending on the chosen procedure, the resulting science maps can be rather different (Boyack, Klavans, and Börner 2005).

4.1.4 Visualization

Visualization is the step in the science mapping process in which the information contained in the network is displayed in a visual layout comprehensible to human understanding. Following Waltman and van Eck (2014), we distinguish two basic types of visualizations: graph-based and distance-based. They are not the only approaches available but are probably the most common in science mapping [12].

In graph-based visualization, the network is visualized as a graph made of nodes and edges (Fig. 1, 2, 3, 4, and 5 are examples of graph-based visualizations). The edges (links between nodes) are displayed to indicate the relatedness of nodes. The most common techniques for creating such graphs are force-directed graph drawing algorithms, such as the Kamada and Kawai and the Fruchterman and Reingold algorithms (Chen 2013).

To understand the underlying mechanism of these algorithms, imagine the network as a physical system, in which the nodes are little balls electrically charged and the links are springs that connect them. The electric charge creates a repulsive force between the balls, counterbalanced by the attractive force generated by the springs. The algorithms basically simulate the network as such a physical system made of balls and springs and apply two opposite forces to the nodes, one attractive (proportional to the weight of the link between two nodes) and the other repulsive, until the system comes to a state of mechanical equilibrium. The final layout is the one corresponding to such an equilibrium state. Note that several configurations are possible since usually there is no unique equilibrium state.
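The balls-and-springs metaphor can be turned into a toy simulation. The sketch below is a deliberately simplified illustration, loosely inspired by the Fruchterman and Reingold approach and not a faithful implementation of any published algorithm; the specific force formulas, the step size, the cooling factor, and all names are assumptions.

```python
import math
import random


def _unit_and_distance(p, q):
    """Unit vector pointing from q to p, and the distance between them."""
    dx, dy = p[0] - q[0], p[1] - q[1]
    dist = max(math.hypot(dx, dy), 1e-9)  # avoid division by zero
    return dx / dist, dy / dist, dist


def force_directed_layout(nodes, weighted_links, iterations=300, seed=0):
    """Toy balls-and-springs layout; returns {node: (x, y)}."""
    rng = random.Random(seed)
    pos = {n: [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)] for n in nodes}
    step = 0.1  # maximum displacement per iteration (assumed value)
    for _ in range(iterations):
        force = {n: [0.0, 0.0] for n in nodes}
        # Repulsion between every pair of nodes (the "electric charge").
        for i, u in enumerate(nodes):
            for v in nodes[i + 1:]:
                ux, uy, dist = _unit_and_distance(pos[u], pos[v])
                repulsion = 1.0 / dist
                force[u][0] += repulsion * ux
                force[u][1] += repulsion * uy
                force[v][0] -= repulsion * ux
                force[v][1] -= repulsion * uy
        # Attraction along the links (the "springs"), scaled by link weight.
        for (u, v), weight in weighted_links.items():
            ux, uy, dist = _unit_and_distance(pos[u], pos[v])
            attraction = weight * dist * dist
            force[u][0] -= attraction * ux
            force[u][1] -= attraction * uy
            force[v][0] += attraction * ux
            force[v][1] += attraction * uy
        # Move each node a small, capped step along the net force.
        for n in nodes:
            fx, fy = force[n]
            magnitude = math.hypot(fx, fy)
            if magnitude > 0:
                move = min(magnitude, step)
                pos[n][0] += fx / magnitude * move
                pos[n][1] += fy / magnitude * move
        step *= 0.99  # gradual cooling so that the system settles
    return {n: (p[0], p[1]) for n, p in pos.items()}


layout = force_directed_layout(["a", "b", "c", "d"],
                               {("a", "b"): 1.0, ("b", "c"): 2.0})
```

Changing the `seed` argument changes the random starting positions and will typically produce a different final layout, which illustrates why the equilibrium state is not unique.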

Force-directed graph drawing algorithms are implemented in software for network analysis and visualization, such as Gephi [13] and Pajek [14]. An example of a graph-based science map created with Pajek and visualized with the Kamada and Kawai algorithm can be found in (Leydesdorff and Rafols 2009, fig. 4). An example of a graph-based science map created with Gephi can be found in (Weingart 2015, fig. 4).

The other visualization approach is distance-based. In distance-based visualizations, the distance of nodes on the map reflects their similarity, so that similar nodes are placed closer and dissimilar nodes far away. Note that in graph-based visualization, on the other hand, the position of the nodes is not directly related to their similarities, but it is a product of the “pull and push” mechanism of the drawing algorithm. In distance-based visualizations, links can be shown or not.

Distance-based visualizations are conceptually closer to geographic maps than graph-based visualizations. Geographic maps represent relationships in space by placing objects that are close in physical space near each other on the map and objects that are distant in physical space further apart on the map. Distance-based maps have the same goal, but instead of being based on physical distances, they are based on similarities between objects.

To produce a distance-based visualization, therefore, the similarities between the nodes must be transformed into distances [15]. The distance matrix that is thus obtained is conceptually analogous to the table reporting the distances between pairs of cities in a geographic atlas. The task consists of reconstructing from the relative distances the positions of the items on the map, i.e., in finding the coordinates of the items in a two-dimensional space starting from their reciprocal distances.

To fulfill this task several statistical techniques have been developed. The most important belong to the family of multi-dimensional scaling (MDS) methods (Borg and Groenen 2010). They aim to find the coordinates of the points in a lower-dimensional space (usually, a plane) such that the distances of the points on the lower-dimensional space reflect as accurately as possible the original distances of the points. The average difference between the distances on the map and the original distances tells us how much the map distorts the original configuration. The amount of distortion is used to calculate the stress of the map. The various algorithms for MDS essentially adjust the positions of the points until a minimum value of stress is reached [16].
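The notion of stress can be illustrated with a short sketch. The function below computes a raw, un-normalized stress as the sum of squared differences between the distances on the map and the original distances; MDS implementations generally rely on normalized variants, so this is only one simple formulation. The three items and their distances are hypothetical.

```python
import math


def raw_stress(original_distances, coordinates):
    """Sum of squared differences between map and original distances."""
    total = 0.0
    for (i, j), d_original in original_distances.items():
        (x_i, y_i), (x_j, y_j) = coordinates[i], coordinates[j]
        d_map = math.hypot(x_i - x_j, y_i - y_j)
        total += (d_map - d_original) ** 2
    return total


# Hypothetical original distances between three items.
original = {("p", "q"): 3.0, ("p", "r"): 4.0, ("q", "r"): 5.0}

# A configuration that reproduces the distances exactly (a 3-4-5 triangle).
faithful_map = {"p": (0.0, 0.0), "q": (3.0, 0.0), "r": (0.0, 4.0)}
# A configuration in which item r has been displaced.
distorted_map = {"p": (0.0, 0.0), "q": (3.0, 0.0), "r": (0.0, 3.0)}

stress_faithful = raw_stress(original, faithful_map)
stress_distorted = raw_stress(original, distorted_map)
```

The faithful configuration has zero stress, while the distorted one has a positive stress. An MDS algorithm can be thought of as repeatedly adjusting the coordinates so as to move from configurations of the second kind towards configurations of the first kind.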

It is important to underline that distance-based visualizations can be rotated, flipped, and mirrored. Since the output of MDS is not a set of fixed coordinates but a set of relative distances between the points, any geometrical transformation that leaves these distances unaltered can be applied. An example of distance-based visualization can be found in (White and McCain 1998, fig. 2).

When interpreting the output of MDS, which is usually a two-dimensional map, it is very important to be aware that the algorithms can generate visual artifacts, i.e., structures or patterns that are visible on the map but that are not present in the original data. For instance, Eck and co-authors (2010) note that variants of MDS tend to produce quasi-circular layouts when used on big matrices and that they tend to locate items with a high number of co-occurrences toward the center of the map.

However, the trickiest artifacts have to do with the issue of dimensionality reduction, i.e., with the very core of MDS. Imagine that we have four points in a three-dimensional space, each one located at the same distance from the others, like the vertices of a three-sided pyramid with all edges of equal length (Borg and Groenen 2010, chap. 13.3). When we try to place the four points in a two-dimensional plane, we can respect the equal distance only for three points out of four. The fourth point will lie almost at the center of a two-dimensional triangle (as if we were looking at the pyramid from above) so that its distance from the other points will always be shorter than the distances between the three points themselves [17]. Without knowing the original three-dimensional structure and by looking only at the two-dimensional map, we would wrongly conclude that the fourth point is closer to the other three. The wrong conclusion arises from the fact that the two-dimensional map necessarily distorts the three-dimensional structure because it suppresses the third dimension, which however carries essential information (the equal distance between the fourth point and the other three points). By losing such information, it introduces an artifact. Interestingly, MDS can also generate the opposite artifact: points that are placed far apart on the map can nonetheless be connected by “tunnels” in hidden dimensions (Leydesdorff and Rafols 2009). Imagine a paper sheet with two distant points on it: if we bend the sheet, we can make the two points very close in the third dimension, realizing a “tunnel” between them. If we consider only their distance on the two dimensions of the sheet, however, they will appear to be distant.
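The four-point example can be checked numerically. In the sketch below, three points are placed at the vertices of an equilateral triangle with side 1, and the fourth point is placed exactly at its center, as an idealized version of the layout described above. The coordinates are our own choice.

```python
import math

# Three points forming an equilateral triangle with side 1.
triangle = [(0.0, 0.0), (1.0, 0.0), (0.5, math.sqrt(3) / 2)]
# The fourth point, placed at the center (centroid) of the triangle.
center = (sum(x for x, _ in triangle) / 3, sum(y for _, y in triangle) / 3)


def distance(p, q):
    """Euclidean distance between two points in the plane."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


# Distances among the three outer points: all equal to 1.
side_lengths = [distance(triangle[i], triangle[j])
                for i in range(3) for j in range(i + 1, 3)]
# Distances from the fourth point to the other three: all equal to 1/sqrt(3).
center_distances = [distance(center, vertex) for vertex in triangle]
```

On the plane, the fourth point lies at a distance of about 0.58 from each of the other three, although in the original three-dimensional configuration all the distances were equal to 1.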

In sum, dimensionality reduction techniques do allow us to obtain significant insights into the structure of bibliometric networks because they reveal the main features of such a structure. At the same time, since those features may lie in more than two dimensions, one must be aware of the inevitable distortions introduced by the dimensionality reduction itself.

4.1.5 Enriching the map

With the visualization of the network, we reach the basic form of the science map. At this point, the interpretation of the map can already begin. However, it is common to enrich the basic form by displaying further information on it.

A first option is to use the size of the nodes and the width of the links to convey their properties. In a co-citation map, for instance, the size of the nodes can be used to represent the number of citations collected by the units of analysis (publication, journal, author, etc.) and the links can be drawn thicker or darker to express the strength of the connections (e.g., number of co-citations between two nodes). Alternatively, the size of the nodes can be used to represent the centrality of the nodes, using one of the different notions of centrality defined in network theory. The most common include degree centrality, betweenness centrality, closeness centrality, and eigenvector centrality (Wasserman and Faust 1994). The degree centrality of a node is proportional to the number of its links so that it is higher for highly connected nodes. Betweenness is a measure of brokerage or gatekeeping, that is, of how much a node is an “obligatory passage” in the network. In science mapping, it is sometimes used to measure interdisciplinarity (Leydesdorff 2007). Closeness measures how close a node is to the other nodes in the network. Nodes with high closeness are the ones that can be reached with few steps [18] from any other node in the network. Lastly, eigenvector centrality is a measure of the influence of a node in the network. The underlying idea of eigenvector centrality is that the influence of a node depends on the influence of the nodes to which it is connected, so that a node connected with other central nodes increases its centrality.
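Two of these notions, degree and closeness centrality, are simple enough to be computed with a few lines of standard-library Python. The sketch below works on a small hypothetical un-directed and connected network; the normalization used for closeness, (n − 1) divided by the sum of the shortest-path lengths, is one common convention.

```python
from collections import deque

# A small hypothetical un-directed network, given as a list of links.
links = [("a", "c"), ("a", "g"), ("b", "c"), ("b", "d"),
         ("c", "d"), ("c", "g"), ("c", "h"), ("f", "g")]

neighbors = {}
for u, v in links:
    neighbors.setdefault(u, set()).add(v)
    neighbors.setdefault(v, set()).add(u)

n = len(neighbors)

# Degree: number of links of each node; normalized by the maximum possible.
degree = {node: len(adjacent) for node, adjacent in neighbors.items()}
degree_centrality = {node: d / (n - 1) for node, d in degree.items()}


def shortest_path_lengths(source):
    """Breadth-first search: number of steps from source to every node."""
    steps = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in neighbors[u]:
            if v not in steps:
                steps[v] = steps[u] + 1
                queue.append(v)
    return steps


# Closeness: high when a node reaches all the others in few steps.
closeness = {
    node: (n - 1) / sum(shortest_path_lengths(node).values())
    for node in neighbors
}
most_central = max(closeness, key=closeness.get)
```

In this example, node c has the highest degree and the highest closeness, so it would be drawn as the largest node under either choice. Betweenness and eigenvector centrality require more elaborate computations and are usually obtained from dedicated network analysis software.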

A further option to enrich the map is to use colors to distinguish visually different clusters of nodes. Networks typically display an internal organization in clusters or communities, that is, groups of highly interconnected nodes (Radicchi, Fortunato and Vespignani 2012). In distance-based visualizations, clusters result as sets of close points, separated from other clusters by blank space. The techniques of cluster analysis can be used to detect such communities. One common method is hierarchical agglomerative clustering (Chen 2013). All the units of the map (the nodes) begin alone in groups of size one, then, at each iteration of the clustering algorithm, similar groups are merged, until all the nodes belong to one super-cluster. A resolution parameter controls the granularity of the clustering, i.e., the size of the communities. Different agglomerative methods are characterized by the definition of distance between clusters they use and by the metric employed to calculate the distances (there are many options besides the familiar Euclidean distance). In single-linkage clustering, the distance between two clusters is set equal to the distance between their closest nodes. In complete-linkage clustering, on the other hand, it is equal to the distance between the most distant nodes in the two clusters. Lastly, in centroid linkage clustering, it is equal to the distance between the “centers” or average points (centroids) of the clusters. These clustering procedures, however, are only a small fraction of the available techniques and algorithms for clustering and community detection. In recent years, techniques based on modularity, originally developed in physics, have become increasingly popular (Thijs 2019) [19].
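The agglomerative procedure with single and complete linkage can be sketched as follows. This is a naive illustration for small inputs: it uses the Euclidean distance between points on a two-dimensional map and stops when a requested number of clusters is reached. The stopping rule, the function name, and the example coordinates are assumptions made for the sake of the example.

```python
import math


def agglomerative(points, n_clusters, linkage="single"):
    """Merge the two closest clusters until n_clusters remain.

    Returns a list of clusters, each a list of indices into `points`.
    """
    clusters = [[i] for i in range(len(points))]  # every node starts alone

    def cluster_distance(c1, c2):
        pairwise = [
            math.hypot(points[i][0] - points[j][0], points[i][1] - points[j][1])
            for i in c1 for j in c2
        ]
        # Single linkage: closest pair; complete linkage: most distant pair.
        return min(pairwise) if linkage == "single" else max(pairwise)

    while len(clusters) > n_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = cluster_distance(clusters[a], clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        clusters[a] = clusters[a] + clusters[b]  # merge the closest pair
        del clusters[b]
    return clusters


# Two visually separated groups of points on a hypothetical map.
points = [(0.0, 0.0), (0.0, 1.0), (5.0, 0.0), (5.0, 1.0)]
two_clusters = agglomerative(points, 2, linkage="single")
```

Asking for a single cluster reproduces the end point described above, in which all the nodes belong to one super-cluster. On less clear-cut data, single and complete linkage can produce quite different partitions.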

Clusters can be labelled automatically by extracting terms from the titles, abstracts, and keywords of the publications in the clusters (Chen 2006) (see Section 4.2.2: Co-word analysis based on automatically extracted terms). Each cluster is thus provided with a word-profile and its most relevant words can be superimposed on the map to facilitate the interpretation of the clusters (Chen, Ibekwe-SanJuan and Hou 2010).

A last method for enriching the science map is to use the overlay (Rafols, Porter and Leydesdorff 2010). In science overlay maps, the results of the mapping are laid over a background, that can be, for instance, a global map of science. The background serves as a reference system that facilitates the interpretation of the results. For instance, the scientific output of a university can be overlaid on a global map of science to get an insight into the scientific coverage of the university or its impact (see Section 8: Science maps and science policy).

4.2 Term-based maps

Term-based science maps are used to extract and visualize the intellectual content of a corpus of publications based on the analysis of the terms associated with those publications (Börner, Chen and Boyack 2005). These terms can be the keywords or descriptors of the publications, or they can be extracted automatically from titles and abstracts or even the full texts of articles. Term-based science maps make it possible to explore the intellectual content of publications at a fine-grained level, since titles, abstracts, and keywords are meant to report the main topics, concepts, and results of scientific articles (He 1999; Van Raan and Tijssen 1993).

An important advantage of term-based mapping compared to citation-based mapping is that it applies to fields characterized by the scarce presence of citations, such as applied research and technology (Callon et al. 1983).

Depending on the method by which the terms characterizing a publication are extracted, we can distinguish two types of term-based maps. Classic co-word analysis, developed by Callon and colleagues, is based on human-assigned keywords. Natural language processing (NLP)-based co-word analysis, on the other hand, is based on terms that are automatically extracted from the texts by natural language processing techniques.

Independently of the method, however, co-word analysis rests on some assumptions that have been contested. The main one is that words and terms have a stable meaning across fields and over time so that they can be used as reliable proxies of scientific concepts and ideas (Leydesdorff 1997). However, this assumption may be false, and historians of science have shown that the phenomenon of meaning-shift indeed occurs in science (Kuhn 2000). A possible reply to this criticism is that words in co-word analysis are not used as carriers of meaning but as simple links between texts (Courtial 1998). From an operative point of view, meaning shift can be avoided by restricting the time scope of the analysis to a relatively short period and semantically homogeneous areas (Mutschke and Quan-Haase 2001).

4.2.1 Classic co-word analysis and the strategic diagrams

The first term-based maps were developed in the 1980s by a team of sociologists of science based at the Centre de sociologie de l’innovation at the École des mines in Paris. They were designed to study the interaction between scientific knowledge and technological innovation, and, more generally, the relations between science and society (Callon et al. 1983). It is important to point out that the theoretical foundation of co-word analysis developed by Callon and others lies in the tradition of the science and technology studies, and in particular in the actor-network theory developed by Bruno Latour and others (Callon, Law and Rip 1998; Latour 2003). However, as a mapping method, co-word analysis can be employed without endorsing such a theoretical framework.

Callon and colleagues focused in particular on the descriptors employed by documentation services to index the content of scientific and technological publications (Callon, Courtial and Laville 1991). The method of co-word analysis, then, consists first in collecting all the descriptors of the target documents. After a process of cleaning, in which variants and synonyms are merged and irrelevant descriptors are removed (see Section 3.3: Data cleaning and pre-processing), the co-occurrence frequency of each pair of descriptors is calculated. Two descriptors co-occur if they are used together in the description of a single document. A co-occurrence matrix reporting the co-occurrence frequencies of each pair of descriptors is thus produced, and the raw values are then normalized (see Section 4.1.3: Normalization).

In the classic co-word methodology, as described by Callon and colleagues, the visualizations produced by co-word analysis are strategic diagrams (sometimes called cognitive maps), which are a special kind of science map that should not be confused with distance-based visualizations. To create a strategic diagram, clusters of frequently co-occurring descriptors or keywords are created by some clustering technique. Such clusters are called themes and are described by two characteristics: centrality and density. The centrality of a cluster is given by its external links, i.e., the number of links it has with other clusters. The density of a cluster is defined as the ratio between the number of links that are present within the cluster of keywords and the number of possible links. Each theme is thus defined by two variables, centrality and density, that constitute its coordinates in the strategic diagram (He 1999). Then, the strategic diagram is divided into four quadrants (Mutschke and Quan-Haase 2001). The themes in the first quadrant are characterized by high density and high centrality and constitute the mainstream of the scientific field. The themes in the second quadrant, characterized by high centrality and low density, are unstructured themes that may be described as “bandwagon” themes. The themes in the third quadrant are characterized by high density and low centrality: they are well developed and mature but lack ties to other themes in the field. They are the “ivory tower” themes. Lastly, the themes in the fourth quadrant, characterized by low centrality and low density, comprise both topics that are fading away and new topics that are emerging. In longitudinal analysis, the trajectory of a theme can be followed through the quadrants of the strategic diagram. An example of a strategic diagram can be found in (Cobo et al. 2011a, fig. 6).
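The two coordinates of a theme can be computed directly from the definitions given above. The sketch below is a simplified illustration: links are treated as unweighted, and the thresholds separating "high" from "low" values are passed explicitly, since the choice of cut-off (e.g., the median or the mean across themes) is an assumption left to the analyst. The keywords `k1` to `k6` and all function names are hypothetical.

```python
from itertools import combinations


def density(keywords, links):
    """Links present inside the theme divided by the possible links."""
    possible = len(keywords) * (len(keywords) - 1) / 2
    present = sum(1 for pair in combinations(sorted(keywords), 2)
                  if frozenset(pair) in links)
    return present / possible if possible else 0.0


def centrality(keywords, links):
    """Number of links with exactly one end inside the theme."""
    return sum(1 for link in links if len(link & keywords) == 1)


def quadrant(theme_centrality, theme_density, centrality_cut, density_cut):
    """Assign a theme to a quadrant, following the numbering used above."""
    high_centrality = theme_centrality >= centrality_cut
    high_density = theme_density >= density_cut
    if high_centrality and high_density:
        return "first quadrant (mainstream)"
    if high_centrality:
        return "second quadrant (bandwagon)"
    if high_density:
        return "third quadrant (ivory tower)"
    return "fourth quadrant (emerging or fading)"


# Hypothetical co-word links between six keywords.
links = {frozenset(pair) for pair in [
    ("k1", "k2"), ("k1", "k3"), ("k2", "k3"),  # inside theme A
    ("k4", "k5"),                              # inside theme B
    ("k3", "k4"), ("k3", "k5"),                # between A and B
]}
theme_a = {"k1", "k2", "k3"}
theme_b = {"k4", "k5", "k6"}

coordinates_a = (centrality(theme_a, links), density(theme_a, links))
coordinates_b = (centrality(theme_b, links), density(theme_b, links))
```

Here both themes have two external links, but theme A realizes all its possible internal links while theme B realizes only one out of three, so the two themes would be placed at the same centrality but at different densities on the diagram.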

The method of co-word analysis based on descriptors or other kinds of keywords may suffer from the so-called “indexer effect” (Law and Whittaker 1992). Indexing may reflect the prejudices or points of view of the human indexers and may be inconsistent between different indexers or change over time. The indexer effect is a problem common to all human-based classifications. The study of research classification systems reveals that they cannot be taken at face value as they are the result of complex disciplinary negotiations in which both intellectual and academic interests are involved [20].

4.2.2 Co-word analysis based on automatically extracted terms

The indexer effect can be partially avoided by resorting to the automatic extraction of terms from titles, abstracts, or even the full texts of articles. However, even if this method does not rely on the choices of an indexer, it is not free of human intervention. In fact, it shifts from the choices of the indexer to the choices of the authors of scientific publications, who decide what words should be included in the titles and abstracts. The issue of meaning shift, therefore, is not solved.

NLP techniques are used to extract terms from the textual data (Taheo 2018). In general, terms are n-grams, i.e., sequences of n items (usually words). A special category of n-grams are noun-phrases, i.e., sequences that consist exclusively of nouns and adjectives and that end with a noun (e.g., text mining, network analysis). Algorithms for term detection usually comprise several steps: first, the text is split up into sentences and sentences are split up into single words (tokenization), then so-called stop-words are removed (words such as “and”, “or”, etc.), and the remaining words are assigned to a part of speech, such as verb, noun, adjective, etc. (part-of-speech tagging). Noun-phrases are then identified, and, lastly, variants (e.g., plurals) are merged into one form. Once the list of noun-phrases is obtained, a fraction of them is retained. A common strategy is to select only the most relevant noun-phrases. It is important not to confuse relevance with frequency: frequency is a brute measure of the occurrences of a term, whereas relevance can be conceived as a measure of how specific a term is (Spärck Jones 1972). To understand the difference between the two, take a term such as method. In the scientific literature, it has a high frequency; however, it is scarcely relevant to characterize a scientific article, since it probably occurs in most scientific articles. Knowing that an article contains the term method is a very thin indication of its content. Because it is too generic, method therefore has a low relevance. A term such as cardiovascular, on the other hand, is less frequent than method but conveys more information about the specific topic of an article. Therefore, it has a high relevance. Relevance scores serve to discriminate generic from specific terms. A common metric used in text mining to calculate relevance scores is TF-IDF, short for “term frequency-inverse document frequency” (Salton and McGill 1983). The underlying idea is that the relevance of a term is proportional to its occurrences and inversely proportional to the number of documents in which it occurs. Terms that occur very frequently in a few documents will score higher on TF-IDF than terms that occur very frequently in most of the documents. From this basic idea, more refined metrics to calculate the TF-IDF have been developed (Thijs 2019).
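The difference between frequency and relevance can be illustrated with a basic TF-IDF computation. The sketch below uses one of the simplest weighting schemes, the raw count of a term in a document multiplied by the natural logarithm of the inverse document frequency; the three tokenized "documents" are invented for the example, and many refinements of this scheme exist.

```python
import math
from collections import Counter

# Three toy documents, already tokenized and stripped of stop-words.
docs = [
    ["method", "cardiovascular", "cardiovascular", "risk"],
    ["method", "network", "analysis"],
    ["method", "text", "mining"],
]

n_docs = len(docs)
# Document frequency: number of documents in which each term occurs.
doc_freq = Counter(term for doc in docs for term in set(doc))


def tf_idf(term, doc):
    """Term frequency in the document times inverse document frequency."""
    tf = doc.count(term)
    idf = math.log(n_docs / doc_freq[term])
    return tf * idf


score_method = tf_idf("method", docs[0])
score_cardiovascular = tf_idf("cardiovascular", docs[0])
```

Since method occurs in every document, its inverse document frequency is log(1) = 0 and its score vanishes, whereas cardiovascular, which occurs twice in a single document, obtains a positive score.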

After the selection step, the number of documents in which each pair of terms appears is calculated and the corresponding co-occurrence matrix is generated. The process is then the same as for citation-based maps. Usually, the raw co-occurrence frequencies are normalized (see Section 4.1.3: Normalization), and then the term map is obtained in the form of a distance- or graph-based visualization (see Section 4.1.4: Visualization). Further techniques, such as clustering, can be applied to enrich the map (see Section 4.1.5: Enriching the map). Note that term-based maps thus obtained are different from the strategic diagrams produced by classic co-word methodology. They represent the topics recurring in the set of publications analyzed, rather than the properties of their themes.
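The counting step can be sketched as follows, assuming that each document has already been reduced to the set of selected terms it contains. The terms and documents are invented for the example.

```python
from collections import Counter
from itertools import combinations

# Each document is represented by the set of selected terms it contains.
docs_terms = [
    {"science mapping", "citation analysis", "network analysis"},
    {"science mapping", "network analysis"},
    {"text mining", "network analysis"},
]

# For each pair of terms, count the documents in which both appear.
cooccurrence = Counter()
for terms in docs_terms:
    # Sorting gives each pair a canonical order, so that (x, y) and (y, x)
    # are counted as the same un-directed link.
    for pair in combinations(sorted(terms), 2):
        cooccurrence[pair] += 1
```

The resulting counts are the off-diagonal entries of the co-occurrence matrix. For instance, network analysis and science mapping co-occur in two documents, while citation analysis and text mining never co-occur.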

An example of term-based map is shown in Fig. 7.

4.3 Other network-based maps

Besides citation networks and term networks, several other networks can be used to generate science maps. In this section, we briefly present co-authorship networks and interlocking editorship networks.

4.3.1 Co-authorship networks

Co-authorship networks are science maps in which the nodes represent authors and the links the relation of co-authorship, i.e., the number of articles authored together by each pair of authors (Newman 2001). Since co-authorship usually implies a strong relationship of collaboration (Katz and Martin 1997; Liu et al. 2005), co-authorship networks are used to reconstruct and investigate the social networks of researchers, the so-called “invisible colleges” (Crane 1972). It must be remembered, however, that co-authorship is only a proxy of scientific collaboration and that some types of collaborative work occurring in research (e.g., the work of laboratory technicians) do not automatically lead to authorship (Laudel 2002). More generally, the practice of authorship and the requirements for being awarded authorship vary across areas (Larivière et al. 2016). Further issues that complicate the interpretation of co-authorship data are “ghost” and “honorary” authorship, as well as the phenomenon of “hyper-authorship” (Cronin 2001). Especially in bio-medical fields, there is evidence that sometimes scientists are included as co-authors of articles even if they did not contribute to the research process (“gift” or “honorary” authorship), while others are denied legitimately earned authorship (“ghost” authorship) (Wislar et al. 2011). Honorary authorship can artificially inflate the relevance of some researchers in the co-authorship network, whereas the ghost authors remain simply invisible to standard co-authorship analysis. Furthermore, in some areas such as high-energy physics and again biomedicine, recent years have witnessed massive levels of co-authorship. Cronin has coined the term hyper-authorship to describe this growing phenomenon of publications with hundreds, if not thousands, of co-authors. For instance, in 2015, a publication in high-energy physics counted more than 5,000 co-authors. Hyper-authorship does not only challenge the standard conception of authorship but also raises several issues about responsibility and accountability, which have been widely discussed by editors of biomedical journals (see e.g., ICMJE 2019). The presence of hyper-authorship is an important factor that must be considered when a field is investigated by means of co-authorship networks.
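A short sketch shows how co-authorship links are counted and why hyper-authorship matters for the resulting network. The author names and papers are invented, and the helper `links_generated` is our own: it simply counts how many author pairs a single paper with a given number of authors produces.

```python
from collections import Counter
from itertools import combinations

# Hypothetical papers, each given as its list of authors.
papers = [
    ["Ada", "Ben"],
    ["Ada", "Ben", "Cleo"],
    ["Cleo", "Dan"],
]

# Weight of each link: number of papers co-authored by the pair.
coauthorship = Counter()
for authors in papers:
    for pair in combinations(sorted(set(authors)), 2):
        coauthorship[pair] += 1


def links_generated(n_authors):
    """Number of author pairs produced by one paper with n_authors."""
    return n_authors * (n_authors - 1) // 2
```

A paper with three authors generates three links, but a single paper with 5,000 authors generates about 12.5 million links (`links_generated(5000)` returns 12497500), which can dominate the structure of the whole network.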

In sum, co-authorship networks are useful tools to investigate scientific collaboration, but, since they are based on formal authorship, they should be interpreted in the light of detailed knowledge of the authorship practices of the area under investigation, taking into consideration also possible distortions due to ghost, honorary and hyper-authorship.

4.3.2 Interlocking editorship networks

Interlocking editorship is a method to map relationships between journals. Two journals are connected in the interlocking editorship network when they have at least one member of the editorial board in common (Baccini and Barabesi 2010). The editors of a scientific journal play a relevant role as “gatekeepers” of scientific disciplines since they manage the peer review process and make the final decision on the publication of articles (Crane 1967). Therefore, interlocking editorship networks can be used to reveal groups of journals whose editors endorse similar policies. Interlocking editorship networks seem to be highly correlated with journal co-citation networks, showing that similar editorial policies may reflect similar intellectual approaches to the discipline (Baccini et al. 2019).
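The construction of the network can be sketched as follows. The journals and board members are invented; the weight attached to each link, the number of shared board members, is one natural choice and is assumed here for illustration.

```python
from itertools import combinations

# Hypothetical editorial boards.
boards = {
    "Journal A": {"Rossi", "Smith", "Tanaka"},
    "Journal B": {"Smith", "Tanaka", "Weber"},
    "Journal C": {"Weber"},
    "Journal D": {"Okafor"},
}

# Two journals are linked when their boards share at least one member;
# the number of shared members is stored as the weight of the link.
interlocks = {}
for j1, j2 in combinations(sorted(boards), 2):
    shared = boards[j1] & boards[j2]
    if shared:
        interlocks[(j1, j2)] = len(shared)
```

In this toy example, Journal A and Journal B are linked with weight 2, Journal B and Journal C with weight 1, and Journal D remains isolated because its board has no member in common with the others.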

4.4 Other types of science maps

In a broader sense of the term, we can also include in the category of science maps other visual representations of science that are not directly based on networks of scientific publications or that integrate network data with other types of data. Maps based on the analysis of patents belong to the first category and geographic maps of science to the second.

4.4.1 Maps based on patents data

Several maps can be generated from the analysis of patents, which are especially interesting for studying the dynamics of technology systems and the interaction between science and technology (Jaffe and Trajtenberg 2002). A first kind of patent map is based on the patents’ meta-data stored in patent databases. Patents, like publications, have several meta-data, such as the applicants, the region where a patent is in force, the classification category, the application year, etc. Moreover, patents frequently include references to the scientific literature and to other patents. All these meta-data can be used to generate networks of patents or networks of patent features (Federico et al. 2017). For instance, Boyack and Klavans created a map of patents based on the International Patent Classification (IPC). The map shows the relations between patents based on their co-classification: patents that are classified in the same category form clusters (Boyack and Klavans 2008). Other patent maps can be generated based on the citation network of patents, applying the equivalents of the direct linkage, bibliographic coupling, and co-citation methods to patents (Wartburg, Teichert and Rost 2005). Analyzing the references to scientific publications contained in patents allows tracing the links between scientific knowledge and technological applications (Meyer 2000), whereas, by studying the scientists who are both authors of scientific publications and inventors of patents, it is possible to map the overlap between scientific and technological literature (Murray 2002). In recent years, text-mining techniques (see Section 4.2.2: Co-word analysis based on automatically extracted terms) have increasingly been applied to patent mapping (Ranaei et al. 2019). These methods allow us to automatically extract keywords from patent documents and then build patent maps or term-maps of patents (Lee, Yoon and Park 2009; Tseng, Lin and Lin 2007).
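
The idea of co-classification can be illustrated with a small Python sketch in which two patents are linked with a weight equal to the number of classification codes they share. This is a simplified reading of co-classification, not a reconstruction of the procedure used by Boyack and Klavans. The patent identifiers are invented, and the codes are used only as example labels.

```python
from collections import defaultdict
from itertools import combinations


def co_classification_links(patent_classes):
    """Link two patents with a weight equal to the number of
    classification codes they share."""
    # Invert the table: classification code -> patents carrying that code.
    by_class = defaultdict(set)
    for patent, codes in patent_classes.items():
        for code in codes:
            by_class[code].add(patent)
    # Every pair of patents within the same code gains one unit of weight.
    links = defaultdict(int)
    for members in by_class.values():
        for a, b in combinations(sorted(members), 2):
            links[(a, b)] += 1
    return dict(links)


# Invented toy data: patent -> classification codes assigned to it.
patent_classes = {
    "P1": {"G06F", "H04L"},
    "P2": {"G06F", "H04L"},
    "P3": {"H04L"},
    "P4": {"A61K"},
}
patent_links = co_classification_links(patent_classes)
```

The same counting scheme applies, with references in place of classification codes, to the bibliographic coupling of patents.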

4.4.2 Geographic maps of science

The science maps we have presented so far focus on the abstract spaces of science, such as citation, term, and collaboration spaces. Classic science maps aim at visualizing patterns and trajectories occurring in these abstract dimensions. Science, however, is also a concrete activity occurring in specific places on our planet (Finnegan 2015). In fact, science is produced in geographic sites that are not equally distributed on the Earth but are concentrated in a few, highly developed areas. From those sites, scientific knowledge travels, as publications and researchers move around the globe. Geographic maps of science aim at describing the spatial distribution of scientific activities and the circulation of scientific knowledge in geographic space. They are a key research topic in spatial scientometrics (Frenken, Hardeman and Hoekman 2009) and an important tool in the geography of science (Livingstone 2003). By showing the unequal spatial distribution of science and research in different countries, they offer interesting insights into the structure of the global research system (Wichmann Matthiessen, Winkel Schwarz and Find 2002).

Geographic maps of science are created by locating on a geographic map, e.g., a map of the Earth, the nodes of the network we focus on. For instance, the authors of a co-authorship network can be placed on the map based on the coordinates of their research institutions. Or a network of cities collaborating in the production of scientific papers can be constructed and plotted on a map (Leydesdorff and Persson 2010). Or citation flows between universities can be geographically visualized (Börner et al. 2006). The tool CiteSpace (see the Appendix) provides a specific utility to generate geographic maps of science.

5. The representation of time in science mapping

There are different options to include the dimension of time in science maps. A first option consists in longitudinal mapping (Cobo et al. 2011a; Petrovich and Buonomo 2018; Petrovich and Tolusso 2019): based on the publication year of the bibliographic records, subsets of publications belonging to different timespans are created and each of them is mapped separately. Note that any mapping technique can be used, from co-citation analysis to co-word analysis. Each map will represent a sort of “photograph” of the field under investigation in a certain timespan. The sequence of maps allows visualizing the temporal dynamics of the field. A second option consists in representing on the same map the trajectories of the units that change their relative position in subsequent maps (White and McCain 1998). A third option is animating the map: instead of a static visualization, a short movie is created by interpolating the layouts of the network at different moments (Leydesdorff and Schank 2008).
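
The first step of longitudinal mapping, i.e., splitting the bibliographic records into timespans according to their publication year, can be sketched as follows. The record structure (a dictionary with an "id" and a "year" field), the starting year, and the five-year width are assumptions of this sketch. In practice, the choice of the timespans is itself a methodological decision of the analyst.

```python
from collections import defaultdict


def slice_by_period(records, start, width):
    """Group records into consecutive timespans of `width` years.

    Returns {(first_year, last_year): [records]}, ordered by period.
    Records published before `start` are ignored.
    """
    slices = defaultdict(list)
    for record in records:
        year = record["year"]
        if year < start:
            continue
        # First year of the timespan that contains `year`.
        first = start + ((year - start) // width) * width
        slices[(first, first + width - 1)].append(record)
    return dict(sorted(slices.items()))


# Invented toy records; real ones would come from a bibliographic database.
records = [
    {"id": "a", "year": 2001},
    {"id": "b", "year": 2004},
    {"id": "c", "year": 2005},
    {"id": "d", "year": 2012},
    {"id": "e", "year": 1998},
]
periods = slice_by_period(records, start=2000, width=5)
```

Each subset in periods would then be mapped separately with the chosen technique, yielding one “photograph” of the field per timespan.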

The first maps including the temporal dimension, however, used a timeline to represent time. Garfield called them “historiographs” (Garfield 2004). In the timeline-based approach, each node of the network (classically, a publication in a citation network) is linked to a specific point in time (e.g., the publication year). The visualization, then, uses two dimensions: the vertical one is the timeline, whereas the horizontal one is used to represent the relatedness of the items (Waltman and van Eck 2014). The result is a citation network spread over a timeline. Garfield tested the validity of historiographs as tools for reconstructing the history of science by comparing the account of the discovery of DNA written by Asimov with the historiograph based on the bibliographies of the corresponding publications (Garfield 1973). He found a good overlap between the two: the key events in the discovery according to Asimov also appeared in the historiograph.

Alluvial maps are another form of timeline-based visualization. Starting from different phases in the evolution of a network, the network relative to each phase is divided into clusters, and then the trajectories of corresponding clusters in subsequent networks are visualized as streams. The fusions and fissions of clusters over time are visualized as streams that merge and split as they flow along the timeline (Rosvall and Bergstrom 2010) [21].
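
To give an idea of the data behind such a diagram, the following Python sketch counts the items shared by each cluster of one phase and each cluster of the next. The counts would correspond to the widths of the streams. This is only a simplified illustration under the assumption that clusters are plain sets of items, not the procedure of Rosvall and Bergstrom. The cluster labels and item identifiers are invented.

```python
def cluster_flows(before, after):
    """Count the items shared by each cluster of one phase and each
    cluster of the next phase.

    before, after: {cluster_label: set of items}.
    Returns {(label_before, label_after): number of shared items},
    keeping only non-empty overlaps.
    """
    flows = {}
    for name_b, items_b in before.items():
        for name_a, items_a in after.items():
            shared = len(set(items_b) & set(items_a))
            if shared:
                flows[(name_b, name_a)] = shared
    return flows


# Invented toy clusterings of the same field in two subsequent phases.
phase_1 = {"A": {"p1", "p2", "p3", "p4"}, "B": {"p5", "p6"}}
phase_2 = {"X": {"p1", "p2"}, "Y": {"p3", "p4", "p5", "p6", "p7"}}
flows = cluster_flows(phase_1, phase_2)
```

In this toy example, cluster A feeds both X and Y (a fission), while part of A and the whole of B converge into Y (a fusion).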

By combining co-citation mapping and temporal visualization, an amazing visualization of the temporal development of the journal Nature in the last 150 years was recently produced (Gates et al. 2019) [22].

6. Interpreting a science map

Interpreting a science map means linking the visual and geometrical properties of the map to substantive features of the mapped area or field. For instance, clusters of co-cited publications can be mapped to scientific sub-specialties or research topics, bibliographic coupling networks can be interpreted as the research fronts of scientific specialties, co-authorship networks as invisible colleges of scientists, and clusters of journals sharing many editors as structures of academic power. The interpretation of science maps typically involves close interaction with experts of the mapped domain, i.e., experienced researchers who have a deep, albeit qualitative, knowledge of the structure of the target field (Tijssen 1993). Good science maps, however, should not be mere quantitative counterimages of the qualitative knowledge of the domain experts. They should also provide new insights and useful knowledge for science policy purposes.

An important aspect to consider in the interpretation is the level of analysis of the science map, i.e., the units of analysis and the type of relationship displayed by the map. Units and relations affect not only the scale of the map but also the dimension of the scientific enterprise that is captured. Term-based maps and citation-based maps using the document as the unit of analysis highlight the epistemic or cognitive dimension of science, what philosophers of science call the “context of justification” (Lucio-Arias and Leydesdorff 2009). They show the shared epistemic base of a field (Persson 1994). However, they can overemphasize the stability of scientific knowledge, overshadowing the continuous social negotiation of scientific claims (Knorr-Cetina 2003). Co-authorship maps, author co-citation analysis, and interlocking editorship maps, on the other hand, shed light on the social network underlying science, i.e., the “context of discovery” in philosophical terms. When the journal is selected as the unit of analysis, the communication system is highlighted (Cozzens 1989). Hence, each methodology of science mapping offers only a partial representation of the multi-dimensional nature of science and scholarship, which should be kept in mind during the interpretative phase.

General theories and models of the structure and dynamics of science can also help in the interpretation of science maps, providing general interpretative insights (Boyack and Klavans 2019; Chen 2017; Scharnhorst, Börner and Besselaar 2012). At the same time, however, it is pivotal to consider the specific academic and epistemic cultures of the field under study. The interpretation of a science map of a social scientific area, for instance, cannot be based on exactly the same concepts as the interpretation of a science map of a biomedical area, as the social sciences and biomedicine differ in terms of research methods, epistemic culture, specialized terminology, use of references, centrality of the journal system, and so on.

The humanities are a good case in point to highlight the importance of the specificity of research areas. Science mapping and, more generally, scientometrics and bibliometrics have mainly focused on the sciences since the times of Price and Garfield (Franssen and Wouters 2019). Bibliometric methods such as citation analysis were tailored to the citation norms and practices of the sciences. In the humanities, however, citations are frequently used not only to refer to other scholars’ work but also to point out sources and primary materials, the equivalent of experimental data for the sciences (Hellqvist 2009). Negative or contradictory citations of the works of other scholars are relatively more common than in the sciences. In fields such as philosophy, where argumentation is the key epistemic practice, critical citations play a central role (Petrovich 2018). These field-specific citation practices must be considered in the interpretation of citation-based science maps of humanistic areas (Hammarfelt 2016). Moreover, publications in the humanities frequently do not target (only) fellow scholars, but also the wider public (Nederhof 2006). This changes the level of specialized and standardized terminology used and, consequently, affects the capacity of term-based science maps to capture themes and topics [23]. To these interpretive caveats, one should add the limitations of the existing databases in adequately capturing publications in the humanities, as these are often published as monographs and in national languages (Hammarfelt 2017).

7. Science maps and the philosophy of science

In this section, we deal with some epistemological and sociological topics related to science mapping. We start by asking in what sense science maps offer objective representations of science; then we discuss the difference between the published side of science and science in the making; lastly, we examine in more detail the meaning of citations.

7.1 On the objectivity of science maps

Are science maps an objective representation of the structure and dynamics of science? Clearly, the answer greatly depends on the definition of objectivity we endorse (Daston and Galison 2007; Reiss and Sprenger 2017).

In the previous sections, we saw how the creation of a science map involves several methodological and technical decisions by the science cartographer, such as the unit of analysis, the mapping technique, the normalization method, the visualization approach, the clustering algorithm, and so on (see Section 3: Building a science map). Each decision affects the results and leads to different science maps. Therefore, science maps, even when they are generated by computer software, should not be conceived as free of human intervention. Human choices occur frequently in the science mapping workflow and should be made transparent in order to warrant the reproducibility of science maps (Rafols, Porter and Leydesdorff 2010). Therefore, if we equate objectivity with the “lack of human intervention” (the so-called mechanical objectivity), then science maps are not “objective”. Rather, they result from a combination of the features of the mapped field, on the one hand, and the methodological decisions of the science cartographer, on the other. However, we should acknowledge that no map, geographic maps included, is “objective” in this sense. If, instead, objectivity is understood as inter-subjective agreement, then science maps are objective in so far as they can be reproduced by different researchers, as long as they follow the same methodology.